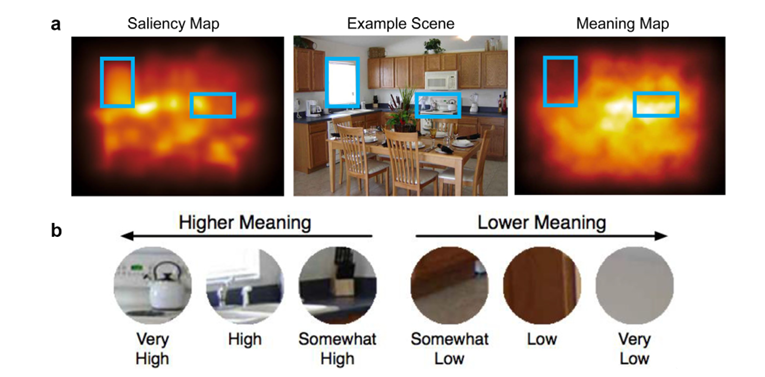

How can we unpack the time course of visual processing of real-world scenes in neural data? It’s a tricky question and, given how fast visual processing is, one uniquely suited to EEG and its millisecond-level temporal resolution. In this project, Steve and I wanted to see whether we could put a timestamp on how quickly information related to visual saliency (i.e., low-level visual features that differ from their surroundings) and semantic informativeness (as defined by methods developed by the Henderson lab) can be detected in neural data.

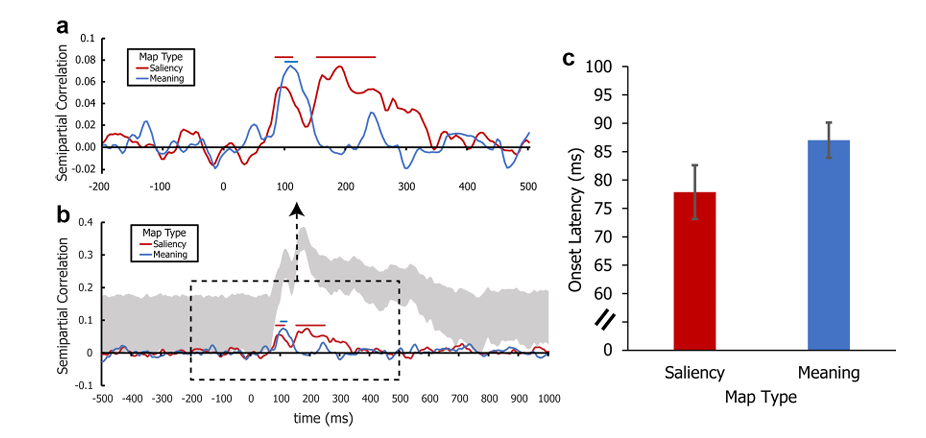

To do this, we recorded scalp EEG from participants as they viewed these scenes while performing a simple 1-back-style memory task. We then adapted the representational similarity analysis (RSA) methods developed by Kriegeskorte, Mur & Bandettini (see here for a quick overview) to estimate, with near-millisecond precision, how quickly information predictive of the spatial distribution of saliency (~78 ms) and meaning (~87 ms) begins to appear in the brain. In other words, as you would expect, the brain very rapidly extracts information about things that “stand out”, but information about potentially meaningful scene locations begins to emerge almost as quickly. All within 100 milliseconds of viewing a scene. Now that’s pretty darn cool.
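For readers unfamiliar with RSA, here is a minimal sketch of the general idea behind a time-resolved version of it. This is not the pipeline from the paper. It assumes per-scene averaged EEG arranged as scenes × channels × time points and one flattened model map (e.g. saliency or meaning) per scene. It also assumes correlation distance for the dissimilarity matrices and Spearman correlation for comparing them. The function names (`rdm`, `time_resolved_rsa`, `onset_latency`), the simulated data, and the onset criterion (first run of 5 consecutive samples above a threshold) are all hypothetical, chosen only for illustration.

```python
import numpy as np
from scipy.spatial.distance import pdist
from scipy.stats import spearmanr


def rdm(patterns):
    """Condensed representational dissimilarity matrix (upper triangle only).

    patterns: array (n_scenes, n_features). The dissimilarity between two
    scenes is 1 - Pearson correlation of their feature vectors.
    """
    return pdist(patterns, metric="correlation")


def time_resolved_rsa(eeg, model_maps):
    """Spearman correlation between the neural RDM at each time point and a model RDM.

    eeg: array (n_scenes, n_channels, n_times), e.g. per-scene averaged ERPs.
    model_maps: array (n_scenes, n_pixels), e.g. flattened saliency or meaning maps.
    """
    model_rdm = rdm(model_maps)  # one fixed model RDM, reused at every time point
    rho = np.empty(eeg.shape[2])
    for t in range(eeg.shape[2]):
        neural_rdm = rdm(eeg[:, :, t])  # scalp topography of each scene at time t
        rho[t] = spearmanr(neural_rdm, model_rdm)[0]
    return rho


def onset_latency(rho, times, threshold, n_consecutive=5):
    """First time at which rho exceeds threshold for n_consecutive samples in a row."""
    above = rho > threshold
    for i in range(len(rho) - n_consecutive + 1):
        if above[i:i + n_consecutive].all():
            return times[i]
    return None  # criterion never reached


# Simulated data: 20 scenes, 64 channels, 2 ms samples from -100 to 298 ms.
rng = np.random.default_rng(0)
times = np.arange(-100, 300, 2)
maps = rng.standard_normal((20, 64))   # stand-in "model maps"
signal = (times >= 50).astype(float)   # scene structure switches on at 50 ms
eeg = maps[:, :, None] * signal + 0.1 * rng.standard_normal((20, 64, times.size))

rho = time_resolved_rsa(eeg, maps)
print(onset_latency(rho, times, threshold=0.5))
```

The simulation is deliberately artificial: after 50 ms it simply copies each scene's model map into its channel pattern, so the RSA time course jumps from near zero to near one at that point. With real data the rise is gradual and noisy. A proper onset estimate would also need a statistical test rather than a fixed threshold. And because saliency and meaning maps tend to be correlated, their contributions would need to be separated (for example with partial correlation), which this sketch does not attempt.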

Kiat, J. E., Hayes, T. R., Henderson, J. M., & Luck, S. J. (2022). Rapid extraction of the spatial distribution of physical saliency and semantic informativeness from natural scenes in the human brain. *Journal of Neuroscience*, 97–108. doi: 10.1523/jneurosci.0602-21.2021